Today I am happy to announce the release of H2O version 1.6.1. The release fixes two build-related issues found in 1.6.0 (link: release notes).

Among them, one is a build error on ARMv8-A, i.e. the 64-bit ARM architecture.

Yes, we now officially support 64-bit ARM!

In late 2015 we have started to see 64bit ARM-based servers / appliances hit the market, and it is likely that we would see more in the coming year.

I hope that H2O will be the best HTTP server for the platform.

EDIT. Personally I am enjoying using Geekbox, a sub-$100 microserver with an octa-core Cortex-A53 processor running Ubuntu :)

Friday, December 18, 2015

Friday, December 4, 2015

H2O HTTP/2 server version 1.6.0 released

Today, I have tagged version 1.6.0 of the H2O HTTP/2 server.

Full list of changes can be found in the release note, but following are the significant ones as I believe.

Error pages are now customizable #606

Finally, error pages can be customized.

You can specify any URL; the contents of the specified URL will be sent to the client when returning an error response. Please consult the document of the errordoc handler for more information.

Support for WebSocket #581

Thanks to Justin Zhu, H2O standalone server is now capable of proxying WebSocket connections.

The feature is considered experimental for the time being and you would need to turn it on explicitly if you need to use it. We will turn the feature on by default in future releases.

Smart file descriptor caching #596

The standalone server now caches open file descriptors, so that serving small static files is significantly faster in version 1.6 when compared to 1.5.

Website administrators need not worry about stale files getting served; the cached file descriptors are closed every once the event loop is being run. In other words, requests that arrive after a file is being modified are guaranteed to have the modified version being served.

Hopefully I will post a benchmark covering the changes in an upcoming blogpost.

HTTP/2 push triggered by mruby is processed ASAP #593

In prior releases, pushed responses were not sent until the response to the request that triggered the push become ready. In 1.6.0, the behavior has been changes so that push triggered by mruby is actually sent while the request is processed by an application server.

The change has noticeable positive impact on user experience, please consult Optimizing performance of multi-tier web applications using HTTP/2 push.

PS. and if you are interested in using H2O with a certificate issued by Let's Encrypt, please refer to Using H2O with Let's Encrypt.

Have fun!

Full list of changes can be found in the release note, but following are the significant ones as I believe.

Error pages are now customizable #606

Finally, error pages can be customized.

You can specify any URL; the contents of the specified URL will be sent to the client when returning an error response. Please consult the document of the errordoc handler for more information.

Support for WebSocket #581

Thanks to Justin Zhu, H2O standalone server is now capable of proxying WebSocket connections.

The feature is considered experimental for the time being and you would need to turn it on explicitly if you need to use it. We will turn the feature on by default in future releases.

Smart file descriptor caching #596

The standalone server now caches open file descriptors, so that serving small static files is significantly faster in version 1.6 when compared to 1.5.

Website administrators need not worry about stale files getting served; the cached file descriptors are closed every once the event loop is being run. In other words, requests that arrive after a file is being modified are guaranteed to have the modified version being served.

Hopefully I will post a benchmark covering the changes in an upcoming blogpost.

HTTP/2 push triggered by mruby is processed ASAP #593

In prior releases, pushed responses were not sent until the response to the request that triggered the push become ready. In 1.6.0, the behavior has been changes so that push triggered by mruby is actually sent while the request is processed by an application server.

The change has noticeable positive impact on user experience, please consult Optimizing performance of multi-tier web applications using HTTP/2 push.

PS. and if you are interested in using H2O with a certificate issued by Let's Encrypt, please refer to Using H2O with Let's Encrypt.

Have fun!

Thursday, December 3, 2015

Optimizing performance of multi-tier web applications using HTTP/2 push

Push is a feature of HTTP/2, that allows a server to speculatively send response to a client, anticipating that the client will use the response.

In my earlier blogpost, I wrote that HTTP/2 push does not have significant effect on web performance when serving static files from a single HTTP/2 server. While that is true, push does improve performance by noticeable margin in other scenarios. Let's look into one common case.

The Theory

Many if not most of today's web applications are multi-tiered. Typically, an HTTP request from a client is first accepted by an httpd (either operated by the provider of the web service or by a CDN operator). The httpd serves asset files by itself, while routing requests for HTML documents to application server through fastcgi or HTTP/1.

It is when the response from the application server takes time that HTTP/2 push gives us a big performance boost.

The chart below should be a clear explanation why. With HTTP/2 push, it has become possible for a server to start sending assets that are going to be referred from HTML, before the generated HTML is returned from the application running behind.

It is not uncommon for an web application to spend hundreds of milliseconds processing an HTTP request, querying and updating the database. It is also common for a CDN edge server to wait for hundreds of milliseconds fetching a HTTP response from an web application server through an inter-continental connection.

In case of the chart, RTT between httpd and client is 50ms and the processing time is 200ms. Therefore, the server is capable of spending 4 round-trips (or typically slightly above 200KB of bandwidth1) for pushing asset files before HTML becomes ready to be served.

And thanks to push transactions, the connection will be warm enough by the time when HTML becomes available to the web server, so that the chance of the server being able to send whole document at once becomes bigger.

Theoretically, the upper bound of time reducible by the proposed approach (i.e. push assets until the main document becomes ready) is:

Cache-aware Server Push

Even in case you have a time window that can be used to push few hundred kilobytes of data, you would definitely not want to waste the bandwidth by pushing responses already cached by the client.

That is why cache-aware server-pusher (CASPER) becomes important.

Initially implemented as an experimental feature in H2O HTTP2 server version 1.5, CASPER tracks the cache state of the web browser using a single Cookie2. The cookie contains a fingerprint of all the high-priority asset files being cached by the browser compressed using Golomb-compressed sets. H2O updates the fingerprint every time it serves a high-priority asset file, as well as for determining whether certain asset files should be pushed or not.

It should be noted that the current fingerprint maintained by the cookie is at best a poor estimate of what is being cached by the client. Without a way to peek into the web browser cache, we cannot update the fingerprint stored in the cookie to reflect evictions from the cache2. Ideally, web browsers should calculate the fingerprint by itself and send the value to the server. But until then, we have to live with using cookies (or a ServiceWorker-based implementation that would give us freedom in implementing our own cache3) as a hacky workaround.

Benchmark

Let's move on to an experiment to verify if the theory can be applied in practice.

For the purpose, I am using the top page of h2o.examp1e.net. The server (H2O version 1.6.0-beta2 with CASPER enabled; see configuration) is given 50ms simulated latency using

FWIW, the size of the responses being served are as follows:

Blocking assets are CSS and JavaScript files that block the critical rendering path (i.e. the files that need to be obtained by the browser before rendering the webpage). Non-blocking assets are asset files that do not block the critical path (e.g. images).

Next two figures are the charts shown by the Chrome's Developer Tools. In the former, none of the responses were pushed. In the latter, blocking assets were pushed using CASPER.

As can be seen, both

Conclusion

As shown in this blogpost, cache-aware server push can be used by a reverse proxy to push assets while waiting for the backend application server to provide dynamically generated content, effectively hiding the processing time of the application server. Or in case of CDN, it can be used to hide the latency between the edge server and the application server.

Considering how common it is the case that the processing time of an web application (or the RTT between an edge server and an application server) is greater than the RTT between the client and the reverse proxy (or the edge server in case of CDN), we can expect cache-aware server push to provide noticeable improvement to web performance in many deployments.

EDIT: This blogpost is written as part of the http2 Advent Calendar 2015 (mostly in Japanese).

In my earlier blogpost, I wrote that HTTP/2 push does not have significant effect on web performance when serving static files from a single HTTP/2 server. While that is true, push does improve performance by noticeable margin in other scenarios. Let's look into one common case.

The Theory

Many if not most of today's web applications are multi-tiered. Typically, an HTTP request from a client is first accepted by an httpd (either operated by the provider of the web service or by a CDN operator). The httpd serves asset files by itself, while routing requests for HTML documents to application server through fastcgi or HTTP/1.

It is when the response from the application server takes time that HTTP/2 push gives us a big performance boost.

The chart below should be a clear explanation why. With HTTP/2 push, it has become possible for a server to start sending assets that are going to be referred from HTML, before the generated HTML is returned from the application running behind.

It is not uncommon for an web application to spend hundreds of milliseconds processing an HTTP request, querying and updating the database. It is also common for a CDN edge server to wait for hundreds of milliseconds fetching a HTTP response from an web application server through an inter-continental connection.

In case of the chart, RTT between httpd and client is 50ms and the processing time is 200ms. Therefore, the server is capable of spending 4 round-trips (or typically slightly above 200KB of bandwidth1) for pushing asset files before HTML becomes ready to be served.

And thanks to push transactions, the connection will be warm enough by the time when HTML becomes available to the web server, so that the chance of the server being able to send whole document at once becomes bigger.

Theoretically, the upper bound of time reducible by the proposed approach (i.e. push assets until the main document becomes ready) is:

time_reducedmax = processing_time + 1 RTT

The additional 1 RTT appears if HTML being delivered is small that it is incapable of growing the send window in the pull case. time_reducedmin is obviously zero, when no resource that can be pushed exists.Cache-aware Server Push

Even in case you have a time window that can be used to push few hundred kilobytes of data, you would definitely not want to waste the bandwidth by pushing responses already cached by the client.

That is why cache-aware server-pusher (CASPER) becomes important.

Initially implemented as an experimental feature in H2O HTTP2 server version 1.5, CASPER tracks the cache state of the web browser using a single Cookie2. The cookie contains a fingerprint of all the high-priority asset files being cached by the browser compressed using Golomb-compressed sets. H2O updates the fingerprint every time it serves a high-priority asset file, as well as for determining whether certain asset files should be pushed or not.

It should be noted that the current fingerprint maintained by the cookie is at best a poor estimate of what is being cached by the client. Without a way to peek into the web browser cache, we cannot update the fingerprint stored in the cookie to reflect evictions from the cache2. Ideally, web browsers should calculate the fingerprint by itself and send the value to the server. But until then, we have to live with using cookies (or a ServiceWorker-based implementation that would give us freedom in implementing our own cache3) as a hacky workaround.

Benchmark

Let's move on to an experiment to verify if the theory can be applied in practice.

For the purpose, I am using the top page of h2o.examp1e.net. The server (H2O version 1.6.0-beta2 with CASPER enabled; see configuration) is given 50ms simulated latency using

tc qdisc, and a web application that returns index.html with 200ms latency is placed behind the server. Google Chrome 46 is used as the test client.FWIW, the size of the responses being served are as follows:

| File type | Size |

|---|---|

| index.html | 3,619 bytes |

| blocking assets | 319,700 bytes (5 files) |

| non-blocking assets | 415,935 bytes (2 files) |

Next two figures are the charts shown by the Chrome's Developer Tools. In the former, none of the responses were pushed. In the latter, blocking assets were pushed using CASPER.

As can be seen, both

DOMContentLoaded and load events are observed around 230 msec earlier when push is being used; which matches the expectation that we would see an improvement of 200 msec to 250 msec.| Event | Without Push (msec) | With Push (msec) | Delta (msec) | Gain |

|---|---|---|---|---|

| DOMContentLoaded | 823 | 595 | 228 | 38% |

| load | 1,010 | 775 | 235 | 30% |

Conclusion

As shown in this blogpost, cache-aware server push can be used by a reverse proxy to push assets while waiting for the backend application server to provide dynamically generated content, effectively hiding the processing time of the application server. Or in case of CDN, it can be used to hide the latency between the edge server and the application server.

Considering how common it is the case that the processing time of an web application (or the RTT between an edge server and an application server) is greater than the RTT between the client and the reverse proxy (or the edge server in case of CDN), we can expect cache-aware server push to provide noticeable improvement to web performance in many deployments.

1: in common case where INITCWND is 10 and MSS is around 1,400 bytes, it is possible to send 150 packets in 4 RTT, reaching 210KB in total

2: fortunately, existence of false-positives in the fingerprint is not a big issue, since the client can simply revert to using ordinary GET request in case push is not used

3: ongoing work is explained in HTTP/2 Push を Service Worker + Cache Aware Server Push で効率化したい話 - Block Rockin' Codes

4: Chromes' timing chart shows pushed streams as being fetched when they are actually being adopted after received

2: fortunately, existence of false-positives in the fingerprint is not a big issue, since the client can simply revert to using ordinary GET request in case push is not used

3: ongoing work is explained in HTTP/2 Push を Service Worker + Cache Aware Server Push で効率化したい話 - Block Rockin' Codes

4: Chromes' timing chart shows pushed streams as being fetched when they are actually being adopted after received

EDIT: This blogpost is written as part of the http2 Advent Calendar 2015 (mostly in Japanese).

Wednesday, December 2, 2015

Using H2O with Let's Encrypt

Let's Encrypt is a new certificate authority that is going to issue certificates for free using automated validation process. They have announced that they will enter public beta on Dec. 3rd 2015.

This blogpost explains how to setup a H2O using the automated process.

Step 1. Install the client

Step 2. Obtain the certificate

If you already have a web server listening to port 80, then run:

Or if you do not have a web server listening on the server, then run:

Step 3. Configure H2O

Setup the configuration file of H2O to use the respective certificate and key files.

That's all. Pretty simple, isn't it?

Kudos to the people behind Let's Encrypt for providing all of these (for free).

For more information, please consult documents on letsencrypt.org and h2o.examp1e.net.

This blogpost explains how to setup a H2O using the automated process.

Step 1. Install the client

% git clone https://github.com/letsencrypt/letsencrypt.git

Step 2. Obtain the certificate

If you already have a web server listening to port 80, then run:

% cd letsencrypt

% ./letsencrypt-auto certonly --webroot \

--webroot-path $DOCROOT \

--email $EMAIL \

--domain $HOST1

$DOCROOT should be the path of the web sever's document root. $EMAIL should be the email address of the website administrator. $HOST should be the hostname of the web server (also the name for which a new certificate will be issued).Or if you do not have a web server listening on the server, then run:

% cd letsencrypt

% ./letsencrypt-auto certonly --standalone \

--email $EMAIL \

--domain $HOSTNAME

Issued certificate and automatically-generated private key will be stored under /etc/letsencrypt/live/$HOSTNAME.Step 3. Configure H2O

Setup the configuration file of H2O to use the respective certificate and key files.

listen:

port: 443

ssl:

certificate-file: /etc/letsencrypt/live/$HOSTNAME/fullchain.pem

key-file: /etc/letsencrypt/live/$HOSTNAME/privkey.pem

Do not forget to replace $HOSTNAMEs within the snippet with your actual hostname.That's all. Pretty simple, isn't it?

Kudos to the people behind Let's Encrypt for providing all of these (for free).

For more information, please consult documents on letsencrypt.org and h2o.examp1e.net.

1: you may also need to use

--server option to obtain a production-ready certificate during the beta processWednesday, November 11, 2015

mruby で同期呼出を非同期化する話(もしくは H2O の mruby ハンドラでネットワークアクセスする話)

■背景

H2Oではバージョン1.5より、mrubyを用い、Rackのインターフェイスに則った形でハンドラを書けるようになっています。

この機能を提供している目的は、正規表現による書き換え等を用いる複雑な設定ファイルではなくプログラミング言語を用いることで、ウェブサーバの設定をより簡潔に拡張しやすくするためです(Apacheのmod_rubyやmod_perlのようにウェブアプリケーションをウェブサーバ内で実行可能にすることではありません)。

とは言っても、現実のウェブサーバの設定においては、外部のデータベース等に問い合わせた結果に基づいたルーティングが必要になることがあります。

H2Oのようなイベントドリブンなウェブサーバ上で動作する、同期モデルを採用するRackインターフェイスを用いて記述されるハンドラ内において、データベースへの問い合わせをどのように実現すれば良いか。問い合わせが同期的だと、その間ウェブサーバの処理が止まってしまうので、Rubyで問い合わせ関数が呼ばれたタイミングで、ウェブサーバ側に処理を戻したいわけです。

そんなこんなで

その後、考えたところ、

■Fiberを使って、同期コールを非同期化するPoC

ざっと、以下のような感じになります。Rack ハンドラ自体を Fiber 内に置き、その入出力と、非同期化したい関数(ここでは

やろうと思えばできることはわかりました。しかし、この手法には制限が2点あります。

■プロトコルバインディングの実装手法

さて、これで行けそうだということは分かったのですが、可能であることと、それが良いアプローチであることが等価であるとは限りません。そもそも、プロトコルバインディングはどのように書かれるべきなのでしょうか。2種類に大別してプロコンを書きたいと思います。

個人的には、rubyでバインディングを書くアプローチが好みです。速度が遅いかも…という点については、Perl IO を用いた HTTP 実装を推進してきた立場から言うと、スクリプト言語のI/Oレイヤの負荷はネットワーク通信を行うプログラムにおいては多くの場合問題にならないと考えます。問題になるとすれば、通信データのパーサですが、ここのみをネイティブコード化するという手法で十分に対応できることは、Plack や Furl に慣れた Perl プログラマであれば納得できる話かと思いますし、(m)ruby においても同等かと思います。

■まとめ

長くなりましたが、H2O (あるいはイベントドリブンなプログラム一般)から、同期的に書かれたネットワーククライアントを呼び出す mruby スクリプトを起動する方法については、

H2Oではバージョン1.5より、mrubyを用い、Rackのインターフェイスに則った形でハンドラを書けるようになっています。

この機能を提供している目的は、正規表現による書き換え等を用いる複雑な設定ファイルではなくプログラミング言語を用いることで、ウェブサーバの設定をより簡潔に拡張しやすくするためです(Apacheのmod_rubyやmod_perlのようにウェブアプリケーションをウェブサーバ内で実行可能にすることではありません)。

とは言っても、現実のウェブサーバの設定においては、外部のデータベース等に問い合わせた結果に基づいたルーティングが必要になることがあります。

H2Oのようなイベントドリブンなウェブサーバ上で動作する、同期モデルを採用するRackインターフェイスを用いて記述されるハンドラ内において、データベースへの問い合わせをどのように実現すれば良いか。問い合わせが同期的だと、その間ウェブサーバの処理が止まってしまうので、Rubyで問い合わせ関数が呼ばれたタイミングで、ウェブサーバ側に処理を戻したいわけです。

そんなこんなで

そのとおりです。mrubyの構造上、Cライブラリ呼出のタイミングで非同期化するの難しそう>「mrubyのコード中でredisへのアクセスが発生した際にh2oがそのスレッドを開放できず、イベントループの恩恵が得られてない」 https://t.co/suTAf8PatM

— Kazuho Oku (@kazuho) November 10, 2015@kazuho むむむ、どういう改善をすれば「恩恵を得られる」ようになりますかね。改善する意志はあります。

— Yukihiro Matsumoto (@yukihiro_matz) November 10, 2015@yukihiro_matz @kazuho CからFiberいじれるようにするとかそういう話ですかね。

— MATSUMOTO, Ryosuke (@matsumotory) November 10, 2015@matsumotory @kazuho yieldとresumeはできますが。後はなにが必要ですか? 作るのはC関数に再入できない問題がありますが、resume同様returnでしか呼べない制限をつければ可能ですね

— Yukihiro Matsumoto (@yukihiro_matz) November 10, 2015その後、考えたところ、

@matsumotory @yukihiro_matz rack handlerをfiber内から呼ぶようにすれば、ひょっとしてmrubyに手を入れずに対応できるんじゃないかと考えています

— Kazuho Oku (@kazuho) November 10, 2015■Fiberを使って、同期コールを非同期化するPoC

ざっと、以下のような感じになります。Rack ハンドラ自体を Fiber 内に置き、その入出力と、非同期化したい関数(ここでは

DB#query)が呼ばれたタイミングで Fiber.yield を呼ぶことで、メインループ(これは実際には C で書くことになる)へ制御を戻しています。# DB class that calls yield

class DB

def query

return Fiber.yield ["db#query"]

end

end

# the application, written as an ordinary Rack handler

app = lambda {|env|

p "received request to #{env["PATH_INFO"]}"

[200, {}, ["hello " + DB.new.query]]

}

# fiber that runs the app

runner = Fiber.new {

req = Fiber.yield

while 1

resp = app.call(req)

req = Fiber.yield ["response", resp]

end

}

runner.resume

# the app to be written in C

msg = {"PATH_INFO"=> "/abc"} # set request obj

while 1

status = runner.resume(msg)

if status[0] == "response"

resp = status[1]

break

elsif status[0] == "db#query"

# is a database query, return the result

msg = ""

else

raise "unexpected status:#{status[0]}"

end

end

p "response:" + resp[2].join("") やろうと思えばできることはわかりました。しかし、この手法には制限が2点あります。

- fiber 内からしか呼べない - それでいいのか?

- fiber 内で、Cコードを経由して呼ばれた ruby コードから Fiber.yield できない

■プロトコルバインディングの実装手法

さて、これで行けそうだということは分かったのですが、可能であることと、それが良いアプローチであることが等価であるとは限りません。そもそも、プロトコルバインディングはどのように書かれるべきなのでしょうか。2種類に大別してプロコンを書きたいと思います。

- Cライブラリのラッパーを書く

- Cライブラリが、非同期モデルをサポートしている必要がある

- イベントループ (libuv, libev, ...) 毎に対応が必要

- プロトコルを実装しなくて良い

- rubyでバインディングを書く

- プロトコルを実装する必要がある

- rubyで書ける!

- 各バックエンド (libuv, libev, ngx_mruby, h2o, ...) が同じ ruby API (TCPSocketのサブセットで良いと思う) を提供すれば、イベントループ毎の対応が不要

- Cより遅いかも…

個人的には、rubyでバインディングを書くアプローチが好みです。速度が遅いかも…という点については、Perl IO を用いた HTTP 実装を推進してきた立場から言うと、スクリプト言語のI/Oレイヤの負荷はネットワーク通信を行うプログラムにおいては多くの場合問題にならないと考えます。問題になるとすれば、通信データのパーサですが、ここのみをネイティブコード化するという手法で十分に対応できることは、Plack や Furl に慣れた Perl プログラマであれば納得できる話かと思いますし、(m)ruby においても同等かと思います。

■まとめ

長くなりましたが、H2O (あるいはイベントドリブンなプログラム一般)から、同期的に書かれたネットワーククライアントを呼び出す mruby スクリプトを起動する方法については、

- 同期的に記述されたアプリケーションを Fiber を使ったラッパーで非同期化する

- ホストプログラムは、Fiber を通じて、TCPSocket と互換性のある同期ソケット API を提供する

- プロトコルバインディングは、Rubyで(もしくは、Ruby の TCPSocket と C で書かれたプロトコルパーサを組み合わせて)提供する

Friday, November 6, 2015

ソート済の整数列を圧縮する件

圧縮されたソート済の整数列ってのは汎用的なデータ構造で、たとえば検索エンジンの転置インデックスとか、いろんなところで使うわけです。で、検索エンジンの場合は速度重要なので、PForDeltaとか様々なデータ構造が研究されてる。

一方、H2O には、ブラウザキャッシュに載ってない js や css をサーバプッシュする仕組み「cache-aware server push」があって、何がキャッシュされているか判定するためにブルームフィルタを全ての HTTP リクエストに含める必要がある。

で、ブルームフィルタを圧縮しようと思うと、ブルームフィルタってのはソート済の整数列として表現できるので、これを圧縮しようって話になる。

検索エンジン等で使う場合は速度重要だけど、HTTPリクエストに載せる場合は空間効率のほうが重要になる。ってことで、空間効率が理論限界に近いゴロム符号(の特殊系であるライス符号)を使うことになる。

ってことで、作ったのがgithub.com/kazuho/golombset。

今週ちょっとcodecをいじって、あと気軽に試せるようにコマンドラインインターフェイスを追加した。

なので、こいつを git clone して make して、以下のような感じで使うことができる。

(100, 155, 931) というソート済の整数列をエンコード

同じ整数列をエンコードしてデコード

(100,155,931)という3つの数値を含むソート済の整数列を5バイトにエンコードできていることがわかる。

もうちょっと実際的な例として、偽陽性が1/100の確率で発生するブルームフィルタに、100個の要素を突っ込むんでエンコードすることを考える。適当にランダムな値を用いてそのようなフィルタを作成しエンコードしてみると、結果が102バイトであることがわかる。

つまり、CSSやJavaScriptのような、ブラウザのレンダリングにクリティカルな影響を与えるファイルが100個あるとして、それらがウェブブラウザのキャッシュ内に存在するかを判定するためのブルームフィルタをHTTPリクエストに添付するためのオーバーヘッドは100バイト程度である、ということになる。さらに、リクエストを2回以上繰り返す場合は、HPACKによる圧縮が効く。

以上が、これなら現実的だよねってんで H2O の cache-aware server push は実装されたのでした、という経緯と、それにあわせて作ったライブラリの紹介でした。

それでは、また。

参考:

ImperialViolet - Smaller than Bloom filters

Golomb-coded sets: smaller than Bloom filters (giovanni.bajo.it)

一方、H2O には、ブラウザキャッシュに載ってない js や css をサーバプッシュする仕組み「cache-aware server push」があって、何がキャッシュされているか判定するためにブルームフィルタを全ての HTTP リクエストに含める必要がある。

で、ブルームフィルタを圧縮しようと思うと、ブルームフィルタってのはソート済の整数列として表現できるので、これを圧縮しようって話になる。

検索エンジン等で使う場合は速度重要だけど、HTTPリクエストに載せる場合は空間効率のほうが重要になる。ってことで、空間効率が理論限界に近いゴロム符号(の特殊系であるライス符号)を使うことになる。

ってことで、作ったのがgithub.com/kazuho/golombset。

今週ちょっとcodecをいじって、あと気軽に試せるようにコマンドラインインターフェイスを追加した。

なので、こいつを git clone して make して、以下のような感じで使うことができる。

% (echo 100; echo 155; echo 931) | ./golombset --encode | od -t x1 0000000 41 90 6d c0 ff 0000005

% (echo 100; echo 155; echo 931) | ./golombset --encode | ./golombset --decode 100 155 931

(100,155,931)という3つの数値を含むソート済の整数列を5バイトにエンコードできていることがわかる。

もうちょっと実際的な例として、偽陽性が1/100の確率で発生するブルームフィルタに、100個の要素を突っ込むんでエンコードすることを考える。適当にランダムな値を用いてそのようなフィルタを作成しエンコードしてみると、結果が102バイトであることがわかる。

% perl -MList::MoreUtils=uniq -e 'my @a = (); while (@a < 100) { @a = uniq sort { $a <=> $b } (@a, int rand(10000)); } print "$_\n" for @a' | ./golombset --encode | wc -c

102

つまり、CSSやJavaScriptのような、ブラウザのレンダリングにクリティカルな影響を与えるファイルが100個あるとして、それらがウェブブラウザのキャッシュ内に存在するかを判定するためのブルームフィルタをHTTPリクエストに添付するためのオーバーヘッドは100バイト程度である、ということになる。さらに、リクエストを2回以上繰り返す場合は、HPACKによる圧縮が効く。

以上が、これなら現実的だよねってんで H2O の cache-aware server push は実装されたのでした、という経緯と、それにあわせて作ったライブラリの紹介でした。

それでは、また。

参考:

ImperialViolet - Smaller than Bloom filters

Golomb-coded sets: smaller than Bloom filters (giovanni.bajo.it)

Thursday, October 22, 2015

Performance improvements with HTTP/2 push and server-driven prioritization

tl;dr

HTTP/2 push only marginally improves web-site performance (even when it does). But it might provide better user experience over mobile networks with TCP middleboxes.

Introduction

Push is an interesting feature of HTTP/2.

By using push, HTTP servers can start sending certain asset files that block rendering (e.g. CSS and script files) before the web browser issues requests for such assets. I have heard (or spoken myself of) anticipations that by doing so we might be able to cut down the response time of the Web.

CASPER (cache-aware server pusher)

The biggest barrier in using HTTP/2 push has so far been considered cache validation.

For the server to start pushing asset files, it needs to be sure that the client does not already have the asset cached. You would never want to push a asset file that is already been cached by the client - doing so not only will waste the bandwidth but also cause negative effect on response time. But how can a server determine the cache state of the client without spending an RTT asking to the client?

That's were CASPER comes in.

CASPER (abbreviation for cache-aware server pusher) is a function introduced in H2O version 1.5, that tracks the cache state of the web browser using a single Cookie. The cookie contains a fingerprint of all the high-priority asset files being cached by the browser compressed using Golomb-compressed sets.

The cookie is associated with every request sent by the client1. So when the server receives a request to HTML, it can immediately determine whether or not the browser is in possession of the blocking assets (CSS and script files) required to render the requested HTML. And it can push the only files that are known not to be cached.

With CASPER, it has now become practical to use HTTP/2 push for serving asset files.

Using HTTP/2 push in H2O

This week, I have started using CASPER on h2o.examp1e.net - the tiny official site of H2O.

The configuration looks like below. The mruby handler initiates push of JavaScript and CSS files if the request is against the top page or one of the HTML documents, and then (by using

The benchmark

With the setting above (with

First, let's look at the first two rows that have

Next, let's compare the first two rows (push:off) with the latter two (push:off). It is interesting that

The fact will have both positive and negative effects to user experience; the positive side is that time user sees a blank screen decreases substantially (the red section - time spent after unload before first-paint). The negative side is that users would need to wait longer until he/she knows that the server has responded (by the browser starting to render the next page).

It is not surprising that turning on push only somewhat improves the first-paint timing compared to both being turned off; the server is capable of sending more CSS and JavaScript before it receives request for image files, and start interleaving the responses with them.

On the other hand, it might be surprising that using push together with reprioritization did not cause any differences. The reason is simple; in this scenario, transferring the necessary assets and the <head> section of the HTML (in total about 320KB) required about 10 round trips (including overhead required by TCP, TLS, HTTP/2). With this much roundtrips, the merit of push can hardly be observed; considering the fact that push is technique to eliminate one round trip necessary for the browser to issue requests for the blocking assets4.

Conclusion

The benchmark reinforces the claims made by some that HTTP2 push will only have marginal effect on web performance5. The results have been consistent with expectations that using push will only optimize the web performance by 1 RTT at maximum, and it would be hard to observe the difference considering the effect of TCP slow start and how many roundtrips are usually required to render a web page.

This means to the users of H2O (with reprioritization turned on by default) that they can expect near-best performance without using push.

On the other hand, we may still need to look at networks having TCP proxies. As discussed in Why TCP optimisation has become more important than content optimization (devcentral.f5.com) some mobile carriers do seem to have such middlebox installed.

Existence of such device is generally a good thing since it not only reduces packet retransmits but also improves TCP bandwidth during the slow-start phase. But the downside is that their existence usually increase application-level RTT, since they expand the amount of data in-flight, which has a negative impact on the responsiveness of HTTP/2. HTTP/2 push will be a good optimization under such network conditions.

Notes:

1. the fingerprint contained in the Cookie header is efficiently compressed by HPACK

2. wpbench was used to collect the numbers; first-paint was calculated as

3. starting from H2O version 1.5,

4. at 10 RTT it is unlikely that we have hit the maximum network bandwidth, and that means that packets will be received by the browser in batch every RTT

5. there are use cases for HTTP/2 push other than pushing asset files

HTTP/2 push only marginally improves web-site performance (even when it does). But it might provide better user experience over mobile networks with TCP middleboxes.

Introduction

Push is an interesting feature of HTTP/2.

By using push, HTTP servers can start sending certain asset files that block rendering (e.g. CSS and script files) before the web browser issues requests for such assets. I have heard (or spoken myself of) anticipations that by doing so we might be able to cut down the response time of the Web.

CASPER (cache-aware server pusher)

The biggest barrier in using HTTP/2 push has so far been considered cache validation.

For the server to start pushing asset files, it needs to be sure that the client does not already have the asset cached. You would never want to push a asset file that is already been cached by the client - doing so not only will waste the bandwidth but also cause negative effect on response time. But how can a server determine the cache state of the client without spending an RTT asking to the client?

That's were CASPER comes in.

CASPER (abbreviation for cache-aware server pusher) is a function introduced in H2O version 1.5, that tracks the cache state of the web browser using a single Cookie. The cookie contains a fingerprint of all the high-priority asset files being cached by the browser compressed using Golomb-compressed sets.

The cookie is associated with every request sent by the client1. So when the server receives a request to HTML, it can immediately determine whether or not the browser is in possession of the blocking assets (CSS and script files) required to render the requested HTML. And it can push the only files that are known not to be cached.

With CASPER, it has now become practical to use HTTP/2 push for serving asset files.

Using HTTP/2 push in H2O

This week, I have started using CASPER on h2o.examp1e.net - the tiny official site of H2O.

The configuration looks like below. The mruby handler initiates push of JavaScript and CSS files if the request is against the top page or one of the HTML documents, and then (by using

399 status code) delegates the request to the next handler (defined by file.dir directive) that actually returns a static file. http2-casper directive is used to turn CASPER on, so that the server will discard push attempts initiated by the mruby handler for assets that are likely cached. http2-reprioritize-blocking-assets is a performance tuning option that raises the priority of blocking assets to highest for web browsers that do not."h2o.examp1e.net:443":

paths:

"/":

mruby.handler: |

lambda do |env|

push_paths = []

if /(\/|\.html)$/.match(env["PATH_INFO"])

push_paths << "/search/jquery-1.9.1.min.js"

push_paths << "/search/oktavia-jquery-ui.js"

push_paths << "/search/oktavia-english-search.js"

push_paths << "/assets/style.css"

push_paths << "/assets/searchstyle.css"

end

return [

399,

push_paths.empty? ?

{} :

{"link" => push_paths.map{|p| "<#{p}>; rel=preload"}.join("\n")},

[]

]

end

file.dir: /path/to/doc-root

http2-casper: ON

http2-reprioritize-blocking-assets: ONThe benchmark

With the setting above (with

http2-reprioritize-blocking-assets both OFF and ON), I have measured unload, first-paint, and load timings2 using Google Chrome 46, and the numbers are as follows. The RTT between the server and the client was ~25 milliseconds. The results were mostly reproducible between multiple attempts.First, let's look at the first two rows that have

push turned off. It is evident that reprio:on3 starts rendering the response 50 milliseconds earlier (2 RTT). This is because unless the option is turned on, the priority tree created by Chrome instructs the web browser to interleave responses containing CSS / JavaScript with those containing image files.Next, let's compare the first two rows (push:off) with the latter two (push:off). It is interesting that

unload timings have moved towards right. This is because when push is turned on, the contents of the asset files are sent before the contents of the HMTL. Since web browsers unload the previous page when it receives the first octets of the HTML file, using HTTP/2 push actually increases the time spent until the previous page is unloaded.The fact will have both positive and negative effects to user experience; the positive side is that time user sees a blank screen decreases substantially (the red section - time spent after unload before first-paint). The negative side is that users would need to wait longer until he/she knows that the server has responded (by the browser starting to render the next page).

It is not surprising that turning on push only somewhat improves the first-paint timing compared to both being turned off; the server is capable of sending more CSS and JavaScript before it receives request for image files, and start interleaving the responses with them.

On the other hand, it might be surprising that using push together with reprioritization did not cause any differences. The reason is simple; in this scenario, transferring the necessary assets and the <head> section of the HTML (in total about 320KB) required about 10 round trips (including overhead required by TCP, TLS, HTTP/2). With this much roundtrips, the merit of push can hardly be observed; considering the fact that push is technique to eliminate one round trip necessary for the browser to issue requests for the blocking assets4.

Conclusion

The benchmark reinforces the claims made by some that HTTP2 push will only have marginal effect on web performance5. The results have been consistent with expectations that using push will only optimize the web performance by 1 RTT at maximum, and it would be hard to observe the difference considering the effect of TCP slow start and how many roundtrips are usually required to render a web page.

This means to the users of H2O (with reprioritization turned on by default) that they can expect near-best performance without using push.

On the other hand, we may still need to look at networks having TCP proxies. As discussed in Why TCP optimisation has become more important than content optimization (devcentral.f5.com) some mobile carriers do seem to have such middlebox installed.

Existence of such device is generally a good thing since it not only reduces packet retransmits but also improves TCP bandwidth during the slow-start phase. But the downside is that their existence usually increase application-level RTT, since they expand the amount of data in-flight, which has a negative impact on the responsiveness of HTTP/2. HTTP/2 push will be a good optimization under such network conditions.

Notes:

1. the fingerprint contained in the Cookie header is efficiently compressed by HPACK

2. wpbench was used to collect the numbers; first-paint was calculated as

max(head-parsed, css-loaded); in this benchmark, DOMContentLoaded timing was indifferent to first-paint3. starting from H2O version 1.5,

http2-reprioritize-blocking-assets option is turned on by default4. at 10 RTT it is unlikely that we have hit the maximum network bandwidth, and that means that packets will be received by the browser in batch every RTT

5. there are use cases for HTTP/2 push other than pushing asset files

Thursday, October 8, 2015

雑なツイートをしてしまったばかりにrubyを高速化するはめになった俺たちは!

逆に言うと、Rubyの文字列型の内部実装がropeになれば、freezeしてもしなくても変わらない速度が出るようになって、結局freezeする必要なんてなかったんやーで丸く収まるんじゃないの?と思いました #雑な感想

— Kazuho Oku (@kazuho) October 6, 2015@kazuho 文字列を弄る話じゃなくて、文字列の identity の話なので、ちょっと関係ないかなぁ、と

— _ko1 (@_ko1) October 6, 2015で、まあ、いくつか気になる点があったので手をつけてしまいました。

1. オブジェクト生成のホットパスの最適化

ホットスポットだとされていたところのコードを読んでると、オブジェクト生成の際に走る関数が割と深いのが問題っぽかった。通常実行されるパスは短いから、それにあわせて最適なコードがはかれるようにコードを調整すれば速くなるはず!!!

とコンセプトコードを書いて投げたら取り込まれた。やったね!!!

と思ったら、ほとんど速くなってないっぽい。これは悲しい…ということで、細かな修正を依頼。

このPR適用すると、問題のマイクロベンチマークが3%〜5%くらい速くなるっぽい。

2. ヒープページのソートをやめる

通常実行される側をいじっても期待ほど速度が上がらなかったので、これは遅い側に原因があるかも…って見ていて気になったのが、ヒープページをソートする処理。現状のRubyは、オブジェクトを格納する「ヒープページ」を16KB単位で確保するんだけど、これを逆参照できるように、アドレスでソートした一覧を持ってる。この構築コストがでかい。

で、これをヒープに書き直してみたところ、rdocを使ったベンチマークで2〜3%の高速化が確認できたので報告。

.@_ko1 気になったので、heap tableを雑にハッシュ化してみましたが、rdocで2〜3%実行時間が減ります(高速化します)ね https://t.co/CJhierZZN3

— Kazuho Oku (@kazuho) October 7, 2015ただ、今日のRubyは、(昔読んだ記事とは異なり)ヒープページが16KB単位でアラインされているということなので、ヒープを使うよりもビットマップを使うべき案件。

3. スイープの最適化

ヒープページのソートを書き直したあとでプロファイラの出力を眺めていたら、GCのスイープ処理が重たいことに気づいた。コードを読んだところ、分岐回数と呼出深度の両面で改善が望めそうだったので、ざっくりやったところ、やはり2〜5%程度実行時間の短縮ができた。ので、これはPRとして報告。

この3つを組み合わせると、rdocみたいな実アプリケーションの実行時間が、手元で5%以上縮みそう!注1 ってことで満足したのがここ二日間の進捗です!!!!!!! なんかいろいろ滞っているような気がしますがすみああおえtぬさおえうh

これからは雑なツイートを慎みたいと思います。

注1: バグがなければ!!

Tuesday, October 6, 2015

[メモ] OS XのホストからVMにnfsでファイル共有

普段OS X上で作業しつつ、開発ディレクトリをOS X上のVMで動いているLinuxやFreeBSDからもアクセスできるようにしてあると、互換性検証がはかどる。

どう設定するか、備忘録をかねてメモ。

1. ゲスト側で通常使用するアカウントのuser-id,group-idを、OS Xのそれに揃える

2. OS Xの/etc/exportsと/etc/nfs.confを以下のように、TCP経由でVMの仮想ネットワークにだけファイルを公開するよう設定

注. VMware Fusion 10の場合は、環境設定から、新しいネットワークを作成し、「NATを使用する」を外し、そのネットワークを使う必要がある。そうしない限り、ホストに見えるTCPのソースアドレスがローカルネットワークのものにならない。

できるだけ単純に構成しようとすると、こんな感じになるかな、と。設定に問題があるようだったら教えてください。

どう設定するか、備忘録をかねてメモ。

1. ゲスト側で通常使用するアカウントのuser-id,group-idを、OS Xのそれに揃える

2. OS Xの/etc/exportsと/etc/nfs.confを以下のように、TCP経由でVMの仮想ネットワークにだけファイルを公開するよう設定

/etc/exports: /shared-dir -mapall=user:group -network netaddr -mask netmask /etc/nfs.conf: nfs.server.udp=0 nfs.server.tcp=13. ゲスト側の/etc/fstabにマウント情報を設定

192.168.140.1:/shared-dir /shared-dir nfs rw,noatime 1 0これで、ホストでも、どのVMでも、

/shared-dirの中身が同じになる。注. VMware Fusion 10の場合は、環境設定から、新しいネットワークを作成し、「NATを使用する」を外し、そのネットワークを使う必要がある。そうしない限り、ホストに見えるTCPのソースアドレスがローカルネットワークのものにならない。

できるだけ単純に構成しようとすると、こんな感じになるかな、と。設定に問題があるようだったら教えてください。

Thursday, October 1, 2015

ウェブページの描画 (first-paint) までの時間を測定するツールを作った件、もしくはHTTP2時代のパフォーマンスチューニングの話

ウェブページの表示までにかかる時間をいかに短くするかってのは、儲かるウェブサイトを構築する上で避けて通れない、とても重要な要素です。

少し古いデータとしては、たとえば、ウェブページの表示が500ミリ秒遅くなると広告売上が1.2%低下するというBingの例なんかも知られているわけです。

「ウェブページの表示までにかかる時間」と言った場合、実際には以下のようないくつかのメトリックがあります。

ウェブサイトチューニングにおいては、

ですが、残念なことに、first-paintまでの時間をAPIを用いて取得できるウェブブラウザは一部に限られています(参照:「Webページ遷移時間のパフォーマンス「First Paint」を計測する方法」)。また、測定にあたって、運用中のウェブページに変更を加えたくない、ということもあったりします。

wpbenchは、これら2点の問題を解消するベンチマークツールです。

wpbenchは、たった1枚のHTMLファイルです。このHTMLファイルを自分のウェブサイトに追加するだけで、そのサイト上の任意のページの表示までにかかる時間が測定可能になります。また、

HTTP/1.1を使っている場合、これらパフォーマンスメトリックは、専らネットワーク状況とウェブブラウザのみに依存して決まる値でした。しかし、HTTP/2では、ウェブブラウザとウェブサーバが恊働して、アセットの転送順序を制御することができるようになった結果、使用するウェブサーバによってパフォーマンスが大幅に変わる、という状況が産まれはじめています。

たとえば、HTTP2サーバ「H2O」のベンチマークページを見ていただくと、ウェブサーバによってfirst-paintまでの時間が倍近く異なるケースがあることがお分かりいただけるかと思います。

既にパフォーマンスチューニング済のウェブサイトを管理している方々も、HTTPS、あるいはHTTP/2への対応にあたり、いま一度、ウェブサイトの表示速度を確認されることをおすすめいたします。

少し古いデータとしては、たとえば、ウェブページの表示が500ミリ秒遅くなると広告売上が1.2%低下するというBingの例なんかも知られているわけです。

「ウェブページの表示までにかかる時間」と言った場合、実際には以下のようないくつかのメトリックがあります。

| イベント | 意味 |

|---|---|

| unload | 現在のページからの離脱。離脱後、first-paintまでは真っ白な表示になります |

| first-paint | ウェブページの初回描画(HTMLの後半や画像は存在しない可能性があります) |

| DOMContentLoaded | ウェブページのレイアウト完了 |

| load (onload) | 画像等を含む全データの表示完了 |

ウェブサイトチューニングにおいては、

- ユーザができるだけ早くウェブページを閲覧し始めることができるよう、first-paintの値を小さくすることを第一の目標注1

- 全データができるだけ早く揃うよう、loadの値を小さくすることを第二の目標

ですが、残念なことに、first-paintまでの時間をAPIを用いて取得できるウェブブラウザは一部に限られています(参照:「Webページ遷移時間のパフォーマンス「First Paint」を計測する方法」)。また、測定にあたって、運用中のウェブページに変更を加えたくない、ということもあったりします。

wpbenchは、これら2点の問題を解消するベンチマークツールです。

wpbenchは、たった1枚のHTMLファイルです。このHTMLファイルを自分のウェブサイトに追加するだけで、そのサイト上の任意のページの表示までにかかる時間が測定可能になります。また、

body.clientHeightをポリングするという力技により、FirefoxやChromeのようなfirst-paint取得のためのAPIを提供しないウェブブラウザにおいても、その値を取得、表示することができます。HTTP/1.1を使っている場合、これらパフォーマンスメトリックは、専らネットワーク状況とウェブブラウザのみに依存して決まる値でした。しかし、HTTP/2では、ウェブブラウザとウェブサーバが恊働して、アセットの転送順序を制御することができるようになった結果、使用するウェブサーバによってパフォーマンスが大幅に変わる、という状況が産まれはじめています。

たとえば、HTTP2サーバ「H2O」のベンチマークページを見ていただくと、ウェブサーバによってfirst-paintまでの時間が倍近く異なるケースがあることがお分かりいただけるかと思います。

既にパフォーマンスチューニング済のウェブサイトを管理している方々も、HTTPS、あるいはHTTP/2への対応にあたり、いま一度、ウェブサイトの表示速度を確認されることをおすすめいたします。

注1: ブラウザによってはfirst-paintのタイミングを取得できないため、DOMContentLoadedを使うこともありますが、DOMContentLoadedには<body>末尾に配置する統計系のスクリプトの読み込みにかかる時間等が加算される点等、注意が必要になります。また、画像なしに閲覧が不可能なサイトについては、Above the foldの値をチューニングする必要があります

Wednesday, September 30, 2015

H2O version 1.5.0 released

Today, I am happy to announce the release of H2O version 1.5.0.

Notable improvements from 1.4 series are as follows:

On-the-fly gzip support

This was a feature requested by many people, and I would like to thank Justin Zhu for doing the hard work!

mruby-based scripting

Server-side scripting using mruby is now considered production level.

And now that the our API is base on Rack, it would be easy for Ruby programmers to use / learn, thanks to its excellent design and documentation.

For this part, my thank you goes to Ryosuke Matsumoto, Masayoshi Takahashi, Masaki TAGAWA.

cache-aware server push

Server-push is an important aspect of HTTP/2, however it has generally believed to be hard to use, since web application do not have the knowledge of what has already been cached on the client-side.

With the help of Ilya Grigorik and the Japanese HTTP/2 community, we have essentially solved the issue by introducing cache-aware server push; the server is now capable of tracking the what the web browser has in its cache, and determine whether or not a resource should be pushed!

We plan to improve the feature in the upcoming releases so that the Web can be even faster!

isolation of private keys

H2O now implements privilege isolation for handling RSA private key operations so that SSL private keys would not leak in case of vulnerabilities such as Heartbleed.

In the upcoming days I will post several blogposts explaining the notable changes. Stay tuned.

Notable improvements from 1.4 series are as follows:

On-the-fly gzip support

This was a feature requested by many people, and I would like to thank Justin Zhu for doing the hard work!

mruby-based scripting

Server-side scripting using mruby is now considered production level.

And now that the our API is base on Rack, it would be easy for Ruby programmers to use / learn, thanks to its excellent design and documentation.

For this part, my thank you goes to Ryosuke Matsumoto, Masayoshi Takahashi, Masaki TAGAWA.

cache-aware server push

Server-push is an important aspect of HTTP/2, however it has generally believed to be hard to use, since web application do not have the knowledge of what has already been cached on the client-side.

With the help of Ilya Grigorik and the Japanese HTTP/2 community, we have essentially solved the issue by introducing cache-aware server push; the server is now capable of tracking the what the web browser has in its cache, and determine whether or not a resource should be pushed!

We plan to improve the feature in the upcoming releases so that the Web can be even faster!

isolation of private keys

H2O now implements privilege isolation for handling RSA private key operations so that SSL private keys would not leak in case of vulnerabilities such as Heartbleed.

In the upcoming days I will post several blogposts explaining the notable changes. Stay tuned.

ラベル:

C,

H2O,

HTTP,

in English,

security,

un*x,

web architecture

Thursday, September 24, 2015

Neverbleed - RSAの秘密鍵演算を別プロセスに分離する話

機能毎にプロセスを分割し、それらを別個の権限のもとで実行することで、脆弱性があった場合の影響を抑え込むというのは、一定以上の規模をもつプログラムでは、しばしば見られるデザインパターンです。

qmailは、そのような設計がなされたメール配送デーモンとして名高いですし、OpenSSHもまた、認証プロセスと通信プロセスを分離することで、外部との通信を担当するコードにバグがあったとしても、ルート権限が奪われないように設計されています(参照: Privilege Separated OpenSSH)。

一方で、OpenSSLにはそのような権限分離は実装されていません。Heartbleedの際にサーバの秘密鍵が漏洩したのも、秘密鍵の取り扱いと、その他の通信の取り扱いを同一のメモリ空間の中で行っていたからだと考えることができます。

ないのなら、自分で作ればいいじゃない…ということで作りました。それが、Neverbleedです。

Neverbleedは、OpenSSLの拡張インターフェイスであるEngineを利用して、RSA秘密鍵を用いる処理を専用プロセスに分離します。OpenSSLの初期化時に専用プロセスを起動し、秘密鍵の読み込みと関連演算は全て専用プロセスで行われるため、OpenSSLを利用するサーバプロセスに脆弱性があったとしても秘密鍵が漏洩することはありません。

OpenSSLの拡張インターフェイスを利用しているため、OpenSSLへの変更は不要ですし、サーバプログラムへの変更もごく少量ですみます。また、専用プロセスとの通信のオーバーヘッドはRSAの秘密鍵演算と比べると非常に小さいため、そのオーバーヘッドは問題になりません。

そんな感じでうまく動いているので、Neverbleedは、今月リリース予定のH2Oバージョン1.5に組み込まれる予定です。

参照: http://www.citi.umich.edu/u/provos/ssh/privsep.html

qmailは、そのような設計がなされたメール配送デーモンとして名高いですし、OpenSSHもまた、認証プロセスと通信プロセスを分離することで、外部との通信を担当するコードにバグがあったとしても、ルート権限が奪われないように設計されています(参照: Privilege Separated OpenSSH)。

一方で、OpenSSLにはそのような権限分離は実装されていません。Heartbleedの際にサーバの秘密鍵が漏洩したのも、秘密鍵の取り扱いと、その他の通信の取り扱いを同一のメモリ空間の中で行っていたからだと考えることができます。

ないのなら、自分で作ればいいじゃない…ということで作りました。それが、Neverbleedです。

Neverbleedは、OpenSSLの拡張インターフェイスであるEngineを利用して、RSA秘密鍵を用いる処理を専用プロセスに分離します。OpenSSLの初期化時に専用プロセスを起動し、秘密鍵の読み込みと関連演算は全て専用プロセスで行われるため、OpenSSLを利用するサーバプロセスに脆弱性があったとしても秘密鍵が漏洩することはありません。

OpenSSLの拡張インターフェイスを利用しているため、OpenSSLへの変更は不要ですし、サーバプログラムへの変更もごく少量ですみます。また、専用プロセスとの通信のオーバーヘッドはRSAの秘密鍵演算と比べると非常に小さいため、そのオーバーヘッドは問題になりません。

そんな感じでうまく動いているので、Neverbleedは、今月リリース予定のH2Oバージョン1.5に組み込まれる予定です。

参照: http://www.citi.umich.edu/u/provos/ssh/privsep.html

Wednesday, September 16, 2015

Directory traversal vulnerability found in H2O

A directory traversal vulnerability has been found in H2O. Users are advised to update immediately.

https://h2o.examp1e.net/vulnerabilities.html#CVE-2015-5638

EDIT. I am sorry to have included an information leakage vulnerability in my software. Information leakage vulnerability consists of two categories: file leakage and memory leakage. Today we have fixed the former; there are no known vulnerabilities that need to be fixed.

However, considering the fact that it is hard to prove that there is no memory leakage vulnerability, we are going to implement privilege separation for handling TLS private keys in the upcoming 1.5 release just in case so that the private keys would not get exposed even if such vulnerability exist.

https://h2o.examp1e.net/vulnerabilities.html#CVE-2015-5638

EDIT. I am sorry to have included an information leakage vulnerability in my software. Information leakage vulnerability consists of two categories: file leakage and memory leakage. Today we have fixed the former; there are no known vulnerabilities that need to be fixed.

However, considering the fact that it is hard to prove that there is no memory leakage vulnerability, we are going to implement privilege separation for handling TLS private keys in the upcoming 1.5 release just in case so that the private keys would not get exposed even if such vulnerability exist.

Thursday, July 23, 2015

前方秘匿性 (forward secrecy) をもつウェブサイトの正しい設定方法

前方秘匿性(forward secrecy)とは、以下のような性質を指します。

鍵が攻撃者や諜報機関など第三者の知るところとなった場合でも、それまで通信していた暗号文が解読されないようにしないといけない、という考え方とともに、最近 HTTPS を利用するウェブサイトにおいても導入が求められるようになってきた概念です。

前方秘匿性を満たすウェブサイトの設定方法については、TLSの暗号化方式をECDH_RSAあるいはECDHE_RSAに設定すれば良い、と述べている文献が多いです。

ですが、ほとんどのウェブサーバにおいて、それは誤りです。

なぜか。

通信を暗号化する鍵(セッション鍵)の確立にDHEあるいはECDHEを用いれば前方秘匿性が確保されるというのは、鍵交換に議論を限ってしまえば正しいです。

しかし、TLSプロトコルには、鍵交換以外にセッション鍵を保存し、再利用する仕組みが存在します。Session ResumptionとSession Ticketの2つがそれに該当します。

このうち、OpenSSLが標準でサポートし、ウェブブラウザが対応している場合(IE9以前とMobile Safari以外の全ての主要ウェブブラウザが該当します)にSession Resumptionより優先して利用されるSession Ticketは、セッション鍵をサーバのみが知るマスターキーで暗号化して、クライアントに送信します。

セッション鍵をマスターキーで暗号化…いやな響きがしませんか?

そうです。このSession Ticketの暗号化に使われるマスターキーを定期的に更新しない限り、マスターキーが流出してしまった場合、過去の通信内容がすべて解読されてしまうことになるのです。

しかし残念なことに、ほとんどのウェブサーバは、マスターキーを自動的に更新する仕組みをそなえていません。

では、どのようにサーバを設定すればいいでしょうか。

解決策1. Session Ticket機能を無効化する

第一の解決策はウェブサーバの設定でSession Ticket機能を無効化することです。Apache の場合は SSLSessionTickets、nginx の場合は ssl_session_tickets という設定コマンドを使うことで、無効化することができます。

しかし、nginx (とevent mpmを使用するApache)は、複数サーバにまたがる session resumption 機能を実装していません。そのため、ロードバランサの背後に複数のHTTPSサーバを立てている場合は、session ticket をオフにすると、新規接続確立のたびにTLSハンドシェイクが発生し、ユーザの体感速度の低下やサーバの負荷増大につながってしまいます。

解決策2. サーバを定期的に再起動する

ApacheやNginxは、サーバを起動するタイミングで Session Ticket のマスターキーを再生成します。なので、たとえば1時間に1回サーバを再起動すれば、前方秘匿性を確保しつつ Session Ticket を使うことができます。簡単ですね!!!

なお、ロードバランサの背後に複数のHTTPSサーバを立てている場合は、それらのサーバを再起動する度に、新しいマスターキーが記述された設定ファイルを再配布する必要があります。このへんは Twitter さんの涙ぐましい努力の話があるので、それを参考にがんばりましょう。

解決策3. H2O を使う

これまで述べてきたように、これまでのウェブサーバの前方秘匿性のサポートはお粗末と言わざるをえません(他にも、マスターキーの強度が強力なセッションキーよりも弱いといった問題も存在します)。

そこで H2O ですよ!!!!

私たちが開発しているH2Oの最新バージョンは、これらの問題を全て解決しています。

具体的に言うと、

・Session Ticket のマスターキーは、他のサーバのように AES-128 ではなく AES-256 です

・Session Ticket は自動的に更新されます(デフォルト15分間隔)

・ロードバランサの背後に複数台のH2Oを設置する場合、Session Ticketをmemcachedで共有するように設定することが可能です

・ついでに、Memcached を用いた複数サーバにまたがるSession Resumptionにも対応しているので、Mobile Safari でのHTTPSアクセスも高速に処理することができます

というわけで、安全なウェブサイトを構築したい人は H2O 使えばいいんじゃないでしょうか。ちなみに、H2O 使うとユーザの体感速度も速くなるのでいいことづくめかと思います。

以上、ウェブサーバの設定に関する注意喚起、兼、H2Oの宣伝でした。

公開鍵暗号の秘密鍵のように、比較的長期に渡って使われる鍵が漏えいしたときでも、それまで通信していた暗号文が解読されないという性質

鍵が漏れることも想定せよ――クラウド時代における「楕円曲線暗号」の必然性 - @IT

鍵が攻撃者や諜報機関など第三者の知るところとなった場合でも、それまで通信していた暗号文が解読されないようにしないといけない、という考え方とともに、最近 HTTPS を利用するウェブサイトにおいても導入が求められるようになってきた概念です。

前方秘匿性を満たすウェブサイトの設定方法については、TLSの暗号化方式をECDH_RSAあるいはECDHE_RSAに設定すれば良い、と述べている文献が多いです。

ですが、ほとんどのウェブサーバにおいて、それは誤りです。

なぜか。

通信を暗号化する鍵(セッション鍵)の確立にDHEあるいはECDHEを用いれば前方秘匿性が確保されるというのは、鍵交換に議論を限ってしまえば正しいです。

しかし、TLSプロトコルには、鍵交換以外にセッション鍵を保存し、再利用する仕組みが存在します。Session ResumptionとSession Ticketの2つがそれに該当します。

このうち、OpenSSLが標準でサポートし、ウェブブラウザが対応している場合(IE9以前とMobile Safari以外の全ての主要ウェブブラウザが該当します)にSession Resumptionより優先して利用されるSession Ticketは、セッション鍵をサーバのみが知るマスターキーで暗号化して、クライアントに送信します。

セッション鍵をマスターキーで暗号化…いやな響きがしませんか?

そうです。このSession Ticketの暗号化に使われるマスターキーを定期的に更新しない限り、マスターキーが流出してしまった場合、過去の通信内容がすべて解読されてしまうことになるのです。

しかし残念なことに、ほとんどのウェブサーバは、マスターキーを自動的に更新する仕組みをそなえていません。

では、どのようにサーバを設定すればいいでしょうか。

解決策1. Session Ticket機能を無効化する

第一の解決策はウェブサーバの設定でSession Ticket機能を無効化することです。Apache の場合は SSLSessionTickets、nginx の場合は ssl_session_tickets という設定コマンドを使うことで、無効化することができます。

しかし、nginx (とevent mpmを使用するApache)は、複数サーバにまたがる session resumption 機能を実装していません。そのため、ロードバランサの背後に複数のHTTPSサーバを立てている場合は、session ticket をオフにすると、新規接続確立のたびにTLSハンドシェイクが発生し、ユーザの体感速度の低下やサーバの負荷増大につながってしまいます。

解決策2. サーバを定期的に再起動する

ApacheやNginxは、サーバを起動するタイミングで Session Ticket のマスターキーを再生成します。なので、たとえば1時間に1回サーバを再起動すれば、前方秘匿性を確保しつつ Session Ticket を使うことができます。簡単ですね!!!

なお、ロードバランサの背後に複数のHTTPSサーバを立てている場合は、それらのサーバを再起動する度に、新しいマスターキーが記述された設定ファイルを再配布する必要があります。このへんは Twitter さんの涙ぐましい努力の話があるので、それを参考にがんばりましょう。

解決策3. H2O を使う

これまで述べてきたように、これまでのウェブサーバの前方秘匿性のサポートはお粗末と言わざるをえません(他にも、マスターキーの強度が強力なセッションキーよりも弱いといった問題も存在します)。

そこで H2O ですよ!!!!

私たちが開発しているH2Oの最新バージョンは、これらの問題を全て解決しています。

具体的に言うと、

・Session Ticket のマスターキーは、他のサーバのように AES-128 ではなく AES-256 です

・Session Ticket は自動的に更新されます(デフォルト15分間隔)

・ロードバランサの背後に複数台のH2Oを設置する場合、Session Ticketをmemcachedで共有するように設定することが可能です

・ついでに、Memcached を用いた複数サーバにまたがるSession Resumptionにも対応しているので、Mobile Safari でのHTTPSアクセスも高速に処理することができます

というわけで、安全なウェブサイトを構築したい人は H2O 使えばいいんじゃないでしょうか。ちなみに、H2O 使うとユーザの体感速度も速くなるのでいいことづくめかと思います。

以上、ウェブサーバの設定に関する注意喚起、兼、H2Oの宣伝でした。

Wednesday, July 22, 2015

H2O version 1.4.0 released with outstanding support for forward secrecy and load balancing (and the experimental mruby handler)

Today I am happy to announce the release of the H2O HTTP/2 server version 1.4.0.

There have been a few changes and bug fixes from version 1.3.1 (that showed big performance improvements over the older generations of HTTP servers without support for request prioritization), but the most prominent ones are the following.

Support for the PROXY protocol

The PROXY protocol is a de-facto standard protocol used by L4 load balancers (such as AWS Elastic Load Balancing) to notify the web servers running behind them the IP addresses of the clients. Support for the protocol is essential for running a web server behind such load balancer; without the support it is impossible to log the address of the client or to work against attacks.

In version 1.4.0, we have added support for the protocol which makes H2O a good choice for large scale and/or highly-available web sites running multiple HTTP servers behind a load balancer.

Support for cache-based and ticket-based TLS session resumption using Memcached (and forward secrecy)

The PROXY protocol is not the only thing that now makes H2O a good choice for such websites.

When running a HTTPS server cluster behind a L4 load balancer, it is desirable that the server supports session resumption using a shared datastore such as memcached.

TLS Session Resumption: Full-speed and Secure is a good read for those who are interested in what session resumption is; in short, it reduces the time spent for establishing a TLS connection to about half, and also reduces the CPU time to below 10%!

However, until now, front-end HTTP servers have been not good at supporting session resumption using a shared datastore.

Among the two resumption methods, Nginx does not support the more-widely-deployed cache-based session resumption using a shared datastore.

It should also be noted that most web servers are not good at supporting ticket-based resumption; they use 128-bit AES for storing master secrets (even in cases when a more complex ciphersuite is used), and also do not automatically roll-over the secrets (which botches forward-secrecy).

As pointed out by Tim Taubert, the sad state of the server-side TLS session resumption implementations has been the headache of administrators trying to setup secure websites. Forward Secrecy at Twitter is an example that shows how difficult it is to configure a website supporting forward-secrecy.

Being the primary developer of H2O, I believe that web servers should be easily configurable to be secure; so in version 1.4.0 we have implemented the following features:

Table 1.Supported Methods of Session Resumption

And with H2O, they are easy to use! A simple configuration like below activates all the features. The H2O server cluster will share information of both cache-based and ticket-based session resumption using memcached, with complex cipher used for protecting master secrets that are automatically rolled over.

Please refer to the documentation for the details of the configuration directive.

Experimental mruby Handler

We are also proud to announce that we now have a scripting engine running within the H2O standalone server that can be used to customize the behavior, and that the programming language is Ruby.

Developed by Yukihiro Matz (the father of the Ruby programming language) and others, mruby is an implementation of the language for embedded use. Thanks to MATSUMOTO Ryosuke the language runtime can now be used to script how the HTTP requests should be handled within H2O.

The handler is still in very early stages and considered unstable (therefore it is not turned on by default, you would need to pass

Please refer to Ryosuke's weblog for more information (in Japanese). In addition to topics related to H2O, you can find excellent entries about how to use a scripting engine within web servers to work against cyber attacks.

Conclusion

All in all, we are happy to provide a new release of the H2O server to the public, that is secure and easy to use (with flexibility), once again raising the bar of what people should expect on a HTTP server to provide.

I hope you enjoy using the new release of H2O.

There have been a few changes and bug fixes from version 1.3.1 (that showed big performance improvements over the older generations of HTTP servers without support for request prioritization), but the most prominent ones are the following.

Support for the PROXY protocol

The PROXY protocol is a de-facto standard protocol used by L4 load balancers (such as AWS Elastic Load Balancing) to notify the web servers running behind them the IP addresses of the clients. Support for the protocol is essential for running a web server behind such load balancer; without the support it is impossible to log the address of the client or to work against attacks.

In version 1.4.0, we have added support for the protocol which makes H2O a good choice for large scale and/or highly-available web sites running multiple HTTP servers behind a load balancer.

Support for cache-based and ticket-based TLS session resumption using Memcached (and forward secrecy)

The PROXY protocol is not the only thing that now makes H2O a good choice for such websites.

When running a HTTPS server cluster behind a L4 load balancer, it is desirable that the server supports session resumption using a shared datastore such as memcached.

TLS Session Resumption: Full-speed and Secure is a good read for those who are interested in what session resumption is; in short, it reduces the time spent for establishing a TLS connection to about half, and also reduces the CPU time to below 10%!

However, until now, front-end HTTP servers have been not good at supporting session resumption using a shared datastore.

Among the two resumption methods, Nginx does not support the more-widely-deployed cache-based session resumption using a shared datastore.

It should also be noted that most web servers are not good at supporting ticket-based resumption; they use 128-bit AES for storing master secrets (even in cases when a more complex ciphersuite is used), and also do not automatically roll-over the secrets (which botches forward-secrecy).

As pointed out by Tim Taubert, the sad state of the server-side TLS session resumption implementations has been the headache of administrators trying to setup secure websites. Forward Secrecy at Twitter is an example that shows how difficult it is to configure a website supporting forward-secrecy.

Being the primary developer of H2O, I believe that web servers should be easily configurable to be secure; so in version 1.4.0 we have implemented the following features:

- cache-based session resumption using memcached

- automatic rollover of master secret used for ticket-based resumption

- synchronization of master secrets that rollover, using memcached

- directive to configure the cipher used for encrypting tickets (with default being

aes-256-cbc)

| Resumption Method | ||

|---|---|---|

| Session Resumption | Session Ticket | |

| Apache (prefork) | yes | no forward-secrecy (AES-128) |

| Apache (worker) | yes | no forward-secrecy (AES-128) |

| Apache (event) | not sharable | no forward-secrecy (AES-128) |

| Nginx | not sharable | no forward-secrecy (AES-128) |

| Varnish (hitch) | needs recompile | no |

| H2O | yes | yes (AES-256) |

And with H2O, they are easy to use! A simple configuration like below activates all the features. The H2O server cluster will share information of both cache-based and ticket-based session resumption using memcached, with complex cipher used for protecting master secrets that are automatically rolled over.

listen:

port: 443

ssl:

key-file: /path/to/key-file

certificate-file: /path/to/certificate-file

proxy-protocol: ON

ssl-session-resumption:

method: all

memcached:

host: address.of.memcached.server

port: 11211

Please refer to the documentation for the details of the configuration directive.

Experimental mruby Handler

We are also proud to announce that we now have a scripting engine running within the H2O standalone server that can be used to customize the behavior, and that the programming language is Ruby.

Developed by Yukihiro Matz (the father of the Ruby programming language) and others, mruby is an implementation of the language for embedded use. Thanks to MATSUMOTO Ryosuke the language runtime can now be used to script how the HTTP requests should be handled within H2O.

The handler is still in very early stages and considered unstable (therefore it is not turned on by default, you would need to pass

-DWITH_MRUBY=ON as an argument to CMake to build H2O with support for the mruby handler), but nevertheless it is already a great addition to the H2O HTTP server; such a scripting engine gives you great flexibility to customize the behavior of the server depending on the tiny aspects of a HTTP request, or to mitigate attacks.Please refer to Ryosuke's weblog for more information (in Japanese). In addition to topics related to H2O, you can find excellent entries about how to use a scripting engine within web servers to work against cyber attacks.

Conclusion

All in all, we are happy to provide a new release of the H2O server to the public, that is secure and easy to use (with flexibility), once again raising the bar of what people should expect on a HTTP server to provide.

I hope you enjoy using the new release of H2O.

Thursday, June 18, 2015

H2OとPHPを組み合わせるの、超簡単です(もしくはmod_rewriteが不要な理由)

FastCGI対応機能がH2Oにマージされたことを受けて、uzullaさんが「H2OでPHP(がちょっとだけ動くまで)」という記事を書いてくださっています。

ありがたやありがたや。

その中で、

ちなみにこの手のことをNginxでやろうとするともっと難しくて、以下のように

こんな難しい設定、書けるわけないだろうが!!!!!!!

というわけで、H2Oの場合はもっと簡単に設定できるようになっています。以下のような感じ。

単純ですね。どうなってるかはコメントを見れば自明かとも思いますが、以下解説します。

実は、H2Oの設定ファイルにおいては、1つのパスに複数のハンドラを設定することができるのです。

複数のハンドラが設定されている場合、H2O は有効なレスポンスが生成できるまで、ハンドラを順次実行していきます。つまり、コメントにあるように、ファイルが存在すればそれを返すし、存在しなければ /index.php/ 以下にリクエストを内部的に転送し、その転送した結果の応答をクライアントに返すわけです。

わかりやすいですね。簡単ですね。

少し理屈っぽい話をすると、mod_rewriteのようなリクエストを書き換える黒魔術は、おおむね3種類の目的に使われてきました。第1の目的は、複数のローカルのディレクトリツリーを重ね合わせてひとつのhttpサイトとして表示すること。第2の目的は、uzullaさんが指摘したような、特定の条件をもつ以外のURLの処理を、FastCGIやアプリケーションサーバに委譲すること。第3の目的は、User-Agentや言語設定によって異なる応答を返すこと。

ですが、前2者を実現するためだけならば、正規表現を用いてURLを書き換えるような手法は過剰にすぎて管理がしづらかったわけです。H2OにおけるPHP対応においては、この点を意識し、複数のハンドラの連鎖を可能にすることで、設定が安全簡潔にできるようにしたのでした。

ちなみに、uzullaさんの例では

最後になりますが、ここで説明したFastCGI対応機能を盛り込んだH2Oバージョン1.3は今朝方リリースされたので、PHPerの皆様もそれ以外の皆様も、お試しいただければ幸甚に存じます。

リンク先のリリース告知エントリにも書いてあるけど、他のウェブサーバより表示速度(first-paint time)が、かなり速いですよ!

ありがたやありがたや。

その中で、

いやはや、まったくそのとおりなわけです。http://hoge/entry/1みたいなのをphpにマップする方法はまだよくわかってません。その内しらべます

github.comを読む限り

FastCGI (or PHP) applications should be as easily configurable as it is for the Apache HTTP serverということで、やったぜ!ってなるんですけど、nginxはもとより、Apacheにおいても現状ルーターをつかっているようなアプリだとhtaccessをいちいちかかないといけないので、Apacheみたいなスタイルが楽なのか?というとちょっと疑問があります。

(たとえば以下みたいなの)

RewriteEngine On RewriteCond %{REQUEST_FILENAME} !-f RewriteRule ^ index.php [QSA,L]ここらへんがスッっとかけないと、まあApache、nginx同様にH2Oの設定のタレが必要になるかなあ、という感じはあります。

ちなみにこの手のことをNginxでやろうとするともっと難しくて、以下のように

try_filesやfastcgi_split_path_infoを使わないと脆弱性が発生するなんて話があるそうです(参照: Setting up PHP-FastCGI and nginx? Don’t trust the tutorials: check your configuration! » Neal Poole)。これ、みんなちゃんとできてるんですかね?# Pass all .php files onto a php-fpm/php-fcgi server.

location ~ \.php$ {

try_files $uri =404;

fastcgi_split_path_info ^(.+\.php)(/.+)$;

include fastcgi_params;

fastcgi_index index.php;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

fastcgi_pass php;

}こんな難しい設定、書けるわけないだろうが!!!!!!!

というわけで、H2Oの場合はもっと簡単に設定できるようになっています。以下のような感じ。

paths:

"/":

# /path/to/doc-root以下の静的ファイルを返す(存在した場合)

file.dir: /path/to/doc-root

# 存在しなければ、/index.php/ に内部転送

redirect:

url: /index.php/

internal: YES

status: 307

単純ですね。どうなってるかはコメントを見れば自明かとも思いますが、以下解説します。

実は、H2Oの設定ファイルにおいては、1つのパスに複数のハンドラを設定することができるのです。

複数のハンドラが設定されている場合、H2O は有効なレスポンスが生成できるまで、ハンドラを順次実行していきます。つまり、コメントにあるように、ファイルが存在すればそれを返すし、存在しなければ /index.php/ 以下にリクエストを内部的に転送し、その転送した結果の応答をクライアントに返すわけです。

わかりやすいですね。簡単ですね。

少し理屈っぽい話をすると、mod_rewriteのようなリクエストを書き換える黒魔術は、おおむね3種類の目的に使われてきました。第1の目的は、複数のローカルのディレクトリツリーを重ね合わせてひとつのhttpサイトとして表示すること。第2の目的は、uzullaさんが指摘したような、特定の条件をもつ以外のURLの処理を、FastCGIやアプリケーションサーバに委譲すること。第3の目的は、User-Agentや言語設定によって異なる応答を返すこと。

ですが、前2者を実現するためだけならば、正規表現を用いてURLを書き換えるような手法は過剰にすぎて管理がしづらかったわけです。H2OにおけるPHP対応においては、この点を意識し、複数のハンドラの連鎖を可能にすることで、設定が安全簡潔にできるようにしたのでした。

ちなみに、uzullaさんの例では

php-fpmを使っていますが、H2Oはphp-cgiを自分で管理することができるので、小規模なウェブサイトにおいては、file.custom-handler: extension: .php fastcgi.spawn: "PHP_FCGI_CHILDREN=10 exec /usr/bin/php-cgi"のように書くと、別途FastCGIデーモンを管理する必要がなくなって、より幸せになれるでしょう(名前は

php-cgiでも、この設定においてはFastCGIとして動作します)。最後になりますが、ここで説明したFastCGI対応機能を盛り込んだH2Oバージョン1.3は今朝方リリースされたので、PHPerの皆様もそれ以外の皆様も、お試しいただければ幸甚に存じます。

リンク先のリリース告知エントリにも書いてあるけど、他のウェブサーバより表示速度(first-paint time)が、かなり速いですよ!

H2O HTTP/2 server version 1.3.0 released; provides faster response to user, adds support for FastCGI, range-request

Today we are happy to announce the release of H2O version 1.3.0. The new release includes many changes and bug fixes since 1.2.0, but the notable ones are as follows.

Faster response to user

It is known that providing faster response to users greatly improve their experience, and many web engineers work hard on optimizing the time spent until user starts seeing the contents. The theoretically easiest way to optimize the speed (without reducing the size of the data being transmitted) is to transfer essential contents first, before transmitting other data such as images.

With the finalization of HTTP/2, such approach has become practical thanks to it's dependency-based prioritization features. However, not all web browsers (and web servers) optimally prioritize the requests. The sad fact is that some of them do not prioritize the requests at all which actually leads to worse performance that HTTP/1.1 in some cases.

Since the release of version 1.2.0, we have conducted benchmark tests that measure first-paint time (time spent until the web browser starts rendering the new webpage), and have added a tuning parameter that can be turned on to optimize the first-paint time of web browsers that do not leverage the dependency-based prioritization, while not disturbing those that implement sophisticated prioritization logic.

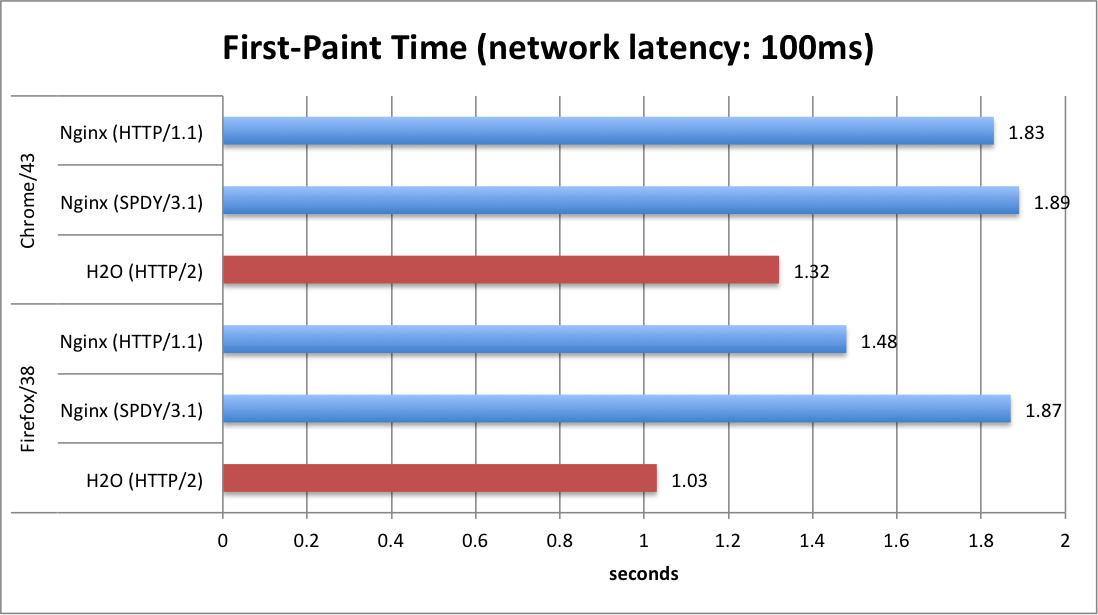

The chart below shows the first-paint time measured using a virtual network with 100ms latency (typical for 4G mobile networks), rendering a web page containing jquery, CSS and multiple image files.

It is evident that the prioritization logic implemented in H2O and the web browsers together offer a huge reduction in first-paint time. As the developer of H2O, we believe that the prioritization logic implemented in H2O to be the best of class (if not the best among all), not only implementing the specification correctly but also for having practical tweaks to optimize against the existing web browsers.

In other words, web-site administrators can provide better (or the best) user-experience to the users by switching their web server to H2O. For more information regarding the topic, please read HTTP/2 (and H2O) improves user experience over HTTP/1.1 or SPDY.

Version 1.3.0 also supports TCP fast open, an extension to TCP/IP that reduces the time required for establishing a new connection. The extension is already implemented in Linux (and Android), and is also expected to be included in iOS 9. As of H2O version 1.3.0 the feature is turned on by default to provide even quicker user experience. Kudos go to Tatsuhiko Kubo for implementing the feature.

FastCGI support

Since the initial release of H2O many users have asked for the feature; it is finally available! And we are also proud that it is easy to use.

First, it can be configured either at path-level or extension-level. The latter means that for example you can simply map

The second is the ability to launch FastCGI process manager under the control of H2O. You do not need to spawn an external FastCGI server and maintain it separately.

Using these features, for example Wordpress can be set-up just by writing few lines of configuration.

Support for range-requests

Support for range-requests (HTTP requests that request a portion of a file) is essential for serving audio/video files. Thanks to Justin Zhu it is now supported by H2O.

Conclusion

All in all, H2O has become a much better product in version 1.3 by improving end-user experience and by adding new features.

We plan to continue improving the product. Stay tuned!

Faster response to user

It is known that providing faster response to users greatly improve their experience, and many web engineers work hard on optimizing the time spent until user starts seeing the contents. The theoretically easiest way to optimize the speed (without reducing the size of the data being transmitted) is to transfer essential contents first, before transmitting other data such as images.

With the finalization of HTTP/2, such approach has become practical thanks to it's dependency-based prioritization features. However, not all web browsers (and web servers) optimally prioritize the requests. The sad fact is that some of them do not prioritize the requests at all which actually leads to worse performance that HTTP/1.1 in some cases.

Since the release of version 1.2.0, we have conducted benchmark tests that measure first-paint time (time spent until the web browser starts rendering the new webpage), and have added a tuning parameter that can be turned on to optimize the first-paint time of web browsers that do not leverage the dependency-based prioritization, while not disturbing those that implement sophisticated prioritization logic.

The chart below shows the first-paint time measured using a virtual network with 100ms latency (typical for 4G mobile networks), rendering a web page containing jquery, CSS and multiple image files.

It is evident that the prioritization logic implemented in H2O and the web browsers together offer a huge reduction in first-paint time. As the developer of H2O, we believe that the prioritization logic implemented in H2O to be the best of class (if not the best among all), not only implementing the specification correctly but also for having practical tweaks to optimize against the existing web browsers.

In other words, web-site administrators can provide better (or the best) user-experience to the users by switching their web server to H2O. For more information regarding the topic, please read HTTP/2 (and H2O) improves user experience over HTTP/1.1 or SPDY.

Version 1.3.0 also supports TCP fast open, an extension to TCP/IP that reduces the time required for establishing a new connection. The extension is already implemented in Linux (and Android), and is also expected to be included in iOS 9. As of H2O version 1.3.0 the feature is turned on by default to provide even quicker user experience. Kudos go to Tatsuhiko Kubo for implementing the feature.

FastCGI support

Since the initial release of H2O many users have asked for the feature; it is finally available! And we are also proud that it is easy to use.

First, it can be configured either at path-level or extension-level. The latter means that for example you can simply map

.php files to the FastCGI handler without writing regular expressions to extract PATH_INFO.The second is the ability to launch FastCGI process manager under the control of H2O. You do not need to spawn an external FastCGI server and maintain it separately.

Using these features, for example Wordpress can be set-up just by writing few lines of configuration.

paths:

"/":

# serve static files if found

file.dir: /path/to/doc-root

# if not found, internally redirect to /index.php/...

redirect:

url: /index.php/

internal: YES

status: 307

# handle PHP scripts using php-cgi (FastCGI mode)

file.custom-handler:

extension: .php

fastcgi.spawn: "PHP_FCGI_CHILDREN=10 exec /usr/bin/php-cgi"

Of course it is possible to configure H2O to connect to FastCGI applications externally using TCP/IP or unix sockets.Support for range-requests

Support for range-requests (HTTP requests that request a portion of a file) is essential for serving audio/video files. Thanks to Justin Zhu it is now supported by H2O.

Conclusion

All in all, H2O has become a much better product in version 1.3 by improving end-user experience and by adding new features.

We plan to continue improving the product. Stay tuned!

Wednesday, June 3, 2015

HTTP/2 (and H2O) improves user experience over HTTP/1.1 or SPDY

HTTP/2 is expected to offer better user experience than HTTP/1.1, the unanswered question is how much the benefit is in practice.

Tonight I have given a presentation regarding the issue, showing HTTP/2 performance of H2O HTTP server at shibuya.pm, a popular technology meetup at Tokyo. This blog post is a summary of the presentation at the meetup, following my recent blog post about the prioritization logic of HTTP/2 and web browser implementations.

Test Scenario:

Benchmark Results:

Analysis:

When using H2O (and HTTP/2) with optimal settings, the first-paint times are reduced by about 30% when compared to HTTP/1.1 or SPDY/3.1.

First-paint time is a good index of user experience; it shows the timing when the user sees the page being rendered for the first time (or when all assets that block rendering arrives at the client side) since he/she triggered the navigation.

The improvements are due to the fact that Firefox (or Chrome with H2O's

Conclusion:

With the benchmark showing 30% reduction in first-paint time, it is obvious that HTTP/2 (implemented by H2O) is offering superior performance against the protocols of previous generations. However, implementations might not be mature enough to offer such boost in performance (as discussed in recent blog post, Chrome has issues with its prioritization logic which is worked around by the configuration directive of H2O; Nginx does not prioritize the streams even though such feature is available in SPDY).

For the time being it is suggested that users conduct benchmarks to see if their applications actually become faster, or consult the developers of the HTTP servers for benchmark results.

Or, you can use H2O, which has been hereby shown to provide good performance under the described scenario :-)

PS. The presentation slides used at the meetup is available here:

Tonight I have given a presentation regarding the issue, showing HTTP/2 performance of H2O HTTP server at shibuya.pm, a popular technology meetup at Tokyo. This blog post is a summary of the presentation at the meetup, following my recent blog post about the prioritization logic of HTTP/2 and web browser implementations.

Test Scenario:

- a modified version of http2rulez.com (<script> tags moved into <head>)

- contains 5 CSS files

- 8 script files in <head>, including a minified version of jquery.js

- contains 18 not-blocking assets (e.g. images files), both small and large